Four Nightmarish Tech Stories That Didn't Get Enough Press

These days, technology is impossible to avoid. We’re even embracing AI here at Cracked.com, with joke efficiency up by 5% ever since we farmed all our New Hampshire stereotype gags out to a bot from Vermont. But for every tech advance that improves our lives, another seems to come along to make everything just a little bit worse. And luckily we’re here to make things even worse than that by telling you all about it.

Chicago’s Minority Report-Style Future Crime Program Told A Guy He Was Going To Be Involved In A Shooting -- Then Made Sure Of It

Robert McDaniel is a Chicago guy with no violent criminal record. So he was quite surprised when he opened his front door one morning to find a bunch of cops, who informed him that he was going to be involved in a shooting. That sounds like a threat, or possibly just the entire CPD getting hit by that truth curse from Liar Liar, but fortunately there was nothing sinister about it! The officers were just referring to Chicago’s “heat list” program, which used an algorithm to scan police data and somehow compile a list of people likely to be involved in a shooting in some way (it apparently couldn’t predict whether they would be the shooter or the victim). And so the police informed McDaniel that they would now be monitoring him at all times in an effort to prevent this future crime. Like we said, nothing sinister about that!

So McDaniel, who, again, had never been involved in a shooting, suddenly found the cops tailing him everywhere. They even insisted on searching the corner store where he worked, then fined him for having a tiny amount of weed. His life was already a nightmare, and that’s when things got really crazy. Just like the program in Minority Report went horribly wrong, the heat list actually created the very shooting it had predicted. Some of McDaniel’s neighbors became suspicious of why the police kept visiting his house without ever arresting him. So rumors began to spread that he was a police informant. And apparently they weren’t at all convinced by his attempts to explain that he was simply trapped in a sci-fi dystopia. Long story short, McDaniel ended up getting shot (twice) for being a snitch.

This is actually a trend. In Pasco County, Florida, the local sheriff launched a reign of terror against an algorithmically generated list of potential offenders. The goal was to “make their lives hell,” forcing them to move away before they could actually commit any of their dastardly crimes. One of the names on the list was a 15-year-old boy whose criminal record extended to stealing a bicycle, and who suddenly found himself constantly followed, questioned, and harassed by the police. The kid was lucky if he could take out the trash without a SWAT guy rising out of the bin like Oscar the Grouch and screaming “I HAVE EYES ON THE TARGET!”

Predictive policing has been developing for a while. Successful companies like PredPol market software that can supposedly analyze police data and predict where criminals will strike next, allowing cops to rush to the scene and either prevent the crime or join in (depending on your city). Critics say that there’s no evidence that these programs actually work, and quite a lot of evidence that they simply replicate existing racial biases in policing. But treating individuals as guilty of future crimes is a new and disturbing development. Seriously, algorithms can just about figure out how to sell sex toys online. Are we really going to let them decide whether your uncle might suddenly snap and launch an international meth ring?

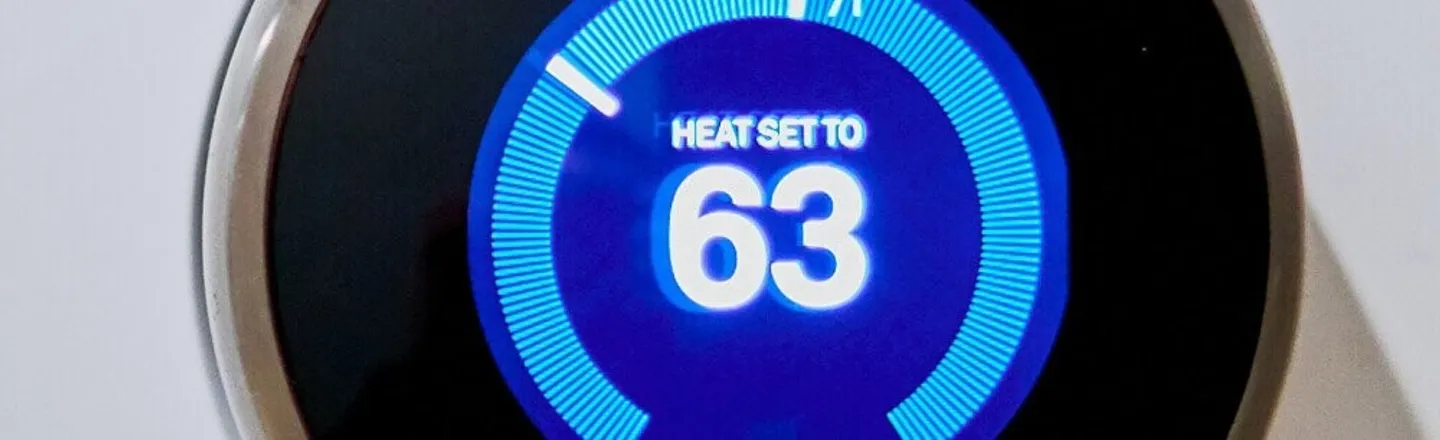

Power Companies Are Secretly Turning Up The Heat On People With Smart Thermostats

Back in June, a major heat wave swept through Texas, leaving locals hotter than a bowl of spicy Texas jambalaya. Such sweltering conditions came as quite a surprise to many residents, since they were barricaded inside their air-conditioned houses at the time. One mother complained that she had turned down the temperature before taking a nap with her toddler, waking up to find the room pushing 80 degrees and the kid covered in sweat. Their AC wasn’t broken, nor did it appear to have been accidentally switched to the “broil” setting, so the family was at a loss. Unbeknownst to them, the power company had secretly taken control of their smart thermostat and was jacking up the heat in an effort to reduce demand on the state electric grid.

This sneaky move was actually fully legal, since the EnergyHub company had previously held a promotion called Smart Savers Texas, offering customers the chance to win up to $5,000 off their bill. Naturally many customers simply yelled “wowie-zowie, that’s a lot of clams!” and signed up while making cash register noises and occasionally howling like a wolf. Which was unfortunate, since hidden in the terms and conditions was the catch that the power company would now be deciding the temperature of your house.

Look, reducing strain on the energy grid is important, especially since the average American uses power like the polar ice caps killed their father and they just heard that revenge is a dish best served hot. And the temperatures involved weren’t dangerous, unless your life depended on serving a perfectly room temperature flan to some kind of flan-loving maniac. But there are serious issues of personal choice involved with the developing market for smart devices. Imagine if the company that made your e-reader could just delete books at will, or if the stereo company could just brick your speakers every time they wanted you to buy new ones, or if you were legally barred from fixing your own tractor. Oh wait, all those things are already true? Darn, sorry EnergyHub, turns out you were behind the curve if anything.

Your Robot Boss Can Fire You At Will, No Humans Needed

Even in our most dystopian visions of the future (Robocop, The Jetsons) the evil boss is still human. But these days you’re just as likely to end up working for a computer, which can fire you at will, no questions asked. Amazon, for instance, uses an in-house system to constantly track its warehouse employees. The system can issue automated warnings if an employee’s productivity drops and even issue termination notices “without input from supervisors.” This has led to Amazon employees skipping bathroom breaks in a desperate effort to avoid being fired. Because nothing says “a productive society” like a bunch of warehouse employees accidentally soiling themselves while trying to meet a sudden surge in demand for something called Duxedo: The Tuxedo For Ducks.

The automatic firings can lead to crazy cases like that of Tony Banks, an Amazon employee who tested positive for Covid-19 in 2020. Amazon obviously approved Banks for medical leave, since very few people are capable of driving a forklift from inside an iron lung. But Banks still received two separate termination notices, after the system repeatedly interpreted his absence as a failure to show up for work. Now, in Amazon’s defense, they say there is an appeals process workers can turn to after the computer fires them, but there’s no word on how many of those appeals are successful. Also it’s a little concerning that their statement ended with “CYBER-BOSS IS MERCIFUL CYBER-BOSS IS KIND. CYBER-BOSS LOVES YOU!”

We don’t want to seem like we’re just picking on Amazon, because this is a trend across multiple companies. Take the case of Ibrahim Diallo, who arrived at his LA office building one day to find that his keycard didn’t work. When a coworker let him upstairs, he found himself locked out of his computer. At this point, security guards arrived and escorted him out of the building, informing him that he had been fired and should stay off the premises. This came as a surprise to Diallo, who was a model employee, and it was an even bigger surprise to his boss, who had no intention of firing him.

The issue escalated all the way to the company’s CEO, but nobody could figure out why Diallo had been fired, or by whom. It eventually turned out that a glitch in the computer system had fired him, automatically disabled all his accounts and cards, and sent an alert to security every time he tried to get in the building. And despite the fact that everyone wanted him back, it still took three weeks to get the system to accept that he worked there.

Artificial Intelligence Probably Killed Someone For The First Time Last Year

There’s a lot of debate over ethics in AI research, but the moment when a machine decides to kill a person and then follows through on it is generally considered to be a worrying sign. As a result, militaries around the world generally make sure to give AI systems at least some human oversight, ensuring that Skynet will have to somehow persuade a bored tech guy to click the “sure” option before enacting the Judgment Day protocol.

But anything goes in Libya, where the western-backed government in Tripoli has been fighting against rebels in the east. According to a UN report, the Tripoli government has deployed a “Lethal Autonomous Weapons System” for the first time in combat. The LAWS consists of a drone capable of using machine learning to analyze images and identify rebel convoys, then open fire automatically, “without requiring data connectivity between the operator and the munition.” Once launched, the drone has no need of human intervention -- it alone decides whom to bomb.

According to the UN, the LAWS “hunted down and remotely engaged” a rebel convoy in March 2020. It’s not clear if anyone was killed, but the convoy was destroyed, and it doesn’t really seem likely that every driver was hurled clear, bounced off a trampoline and landed in a nearby feather mattress showroom. As a caveat, the drone does have a manually operated system, but it’s not nearly as efficient, giving desperate soldiers every incentive to use automatic mode. In other words, AI almost certainly killed someone for the first time a little over a year ago. And the crazy thing is, very few people actually seemed to notice, even though the incident prompted warnings from everyone from the Vatican to the Financial Times.

As one group of professors wrote to the UN, “the key question for humanity today is whether to start a global AI arms race or to prevent it from starting. If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable.” Fortunately, humanity has a great track record at preventing arms races and dealing with looming global threats. So that should all be fine then!

Top image: Dan LeFebvre/Unsplash